Algorithms are not sufficient

Today's AI has a problem: it is expensive. Training ResNet-152, a modern computer vision model, is estimated to cost around 10 trillion floating-point operations, and that is dwarfed by modern language models. Training GPT-3, OpenAI's latest natural language model, is estimated to have required roughly 300 billion trillion (about 3 × 10^23) floating-point operations, which would cost at least $5 million on commercial GPUs. Compare this to the human brain, which can recognize faces, answer questions, and drive cars on as little as a banana and a cup of coffee.
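To see how a FLOP count turns into a dollar figure, here is a back-of-the-envelope sketch. The function name and all inputs in the usage example (workload size, sustained throughput, price per device-hour) are hypothetical placeholders, not measured values. Real runs rarely reach a device's peak throughput, so the throughput argument should already include a realistic utilization factor.

```python
def training_cost_usd(total_flops: float,
                      sustained_flops_per_second: float,
                      usd_per_device_hour: float) -> float:
    """Rough cost of a training run on a single device type.

    All inputs are assumptions supplied by the caller; this is arithmetic,
    not a model of any real cloud pricing.
    """
    seconds = total_flops / sustained_flops_per_second  # wall-clock time on one device
    device_hours = seconds / 3600
    return device_hours * usd_per_device_hour


if __name__ == "__main__":
    # Hypothetical workload and hardware figures, chosen only for illustration.
    cost = training_cost_usd(total_flops=1e21,
                             sustained_flops_per_second=1e14,
                             usd_per_device_hour=2.0)
    print(f"estimated cost: ${cost:,.0f}")
```

Because the cost scales linearly with the FLOP count, a workload that needs a hundred times more operations costs roughly a hundred times more at the same throughput and price.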

We have come a long way. The first computers were special-purpose machines. In 1822, British mathematician Charles Babbage designed the 'difference engine', a machine whose sole purpose was to calculate polynomial functions. In 1958, Cornell professor Frank Rosenblatt built the 'Mark I', a physical incarnation of a single-layer perceptron, for machine vision tasks. In those early days, hardware and algorithm were one and the same.

With the introduction of the von Neumann architecture, a computer design consisting of a processing unit for computation and a memory unit that stores both data and program instructions, hardware and algorithm became decoupled. This paradigm shift made it possible to build general-purpose machines that can be programmed for any desired task. The von Neumann architecture became the blueprint for the modern digital computer.
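To make the stored-program idea concrete, here is a toy machine in Python. The instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator register, and the `run` function are invented for illustration and do not correspond to any real processor. The point is only that instructions and data sit in the same memory, and that every step of the program goes through that memory.

```python
def run(memory: list) -> tuple:
    """Fetch-decode-execute loop over a single shared memory (toy ISA).

    Returns the final memory and the number of memory accesses, counting
    both instruction fetches and data reads/writes.
    """
    pc = 0        # program counter: address of the next instruction
    acc = 0       # accumulator register inside the processing unit
    accesses = 0
    while True:
        instr = memory[pc]          # fetch: instructions live in memory too
        accesses += 1
        pc += 1
        op = instr[0]
        if op == "LOAD":            # acc <- memory[addr]
            acc = memory[instr[1]]
            accesses += 1
        elif op == "ADD":           # acc <- acc + memory[addr]
            acc += memory[instr[1]]
            accesses += 1
        elif op == "STORE":         # memory[addr] <- acc
            memory[instr[1]] = acc
            accesses += 1
        elif op == "HALT":
            return memory, accesses
        else:
            raise ValueError(f"unknown opcode {op!r}")


if __name__ == "__main__":
    # Program (addresses 0-3) and data (addresses 4-6) share one memory.
    program = [
        ("LOAD", 4),
        ("ADD", 5),
        ("STORE", 6),
        ("HALT",),
        2, 3, 0,
    ]
    final, n = run(program)
    print(final[6], n)  # 5 7
```

Even adding two numbers takes seven trips to memory in this toy: four instruction fetches plus three data accesses. Because the same machine runs whatever program is loaded into memory, it is general-purpose.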

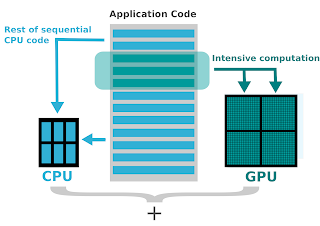

But there is a catch. Data-intensive programs require a lot of communication between the memory and compute units, which slows down computation. This 'von Neumann bottleneck' helps explain why early attempts at AI failed. Standard CPUs are simply not efficient at large matrix multiplications, the core computational operation in deep neural networks. Because of this bottleneck in the hardware of the day, early neural networks were too shallow and did not perform well.
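One rough way to see the bottleneck is to compare how much data a matrix multiplication moves with how much arithmetic it does. The sketch below uses the textbook triple loop and a deliberately pessimistic assumption: every operand is re-read from memory on each use, with no caching. The function name `naive_matmul_with_counts` is hypothetical, and the counts are an upper bound on traffic, not a measurement of any real CPU.

```python
def naive_matmul_with_counts(a, b):
    """Multiply a (m x k) by b (k x n) with the textbook triple loop.

    Also counts floating-point operations and element reads from a and b,
    assuming no value is kept in a cache or register between uses.
    """
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions do not match")
    n = len(b[0])
    c = [[0.0] * n for _ in range(m)]
    flops = 0
    reads = 0
    for i in range(m):
        for j in range(n):
            total = 0.0
            for p in range(k):
                total += a[i][p] * b[p][j]  # one multiply + one add
                flops += 2
                reads += 2                  # a[i][p] and b[p][j] fetched again
            c[i][j] = total
    return c, flops, reads


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[5.0, 6.0], [7.0, 8.0]]
    c, flops, reads = naive_matmul_with_counts(a, b)
    print(c)              # [[19.0, 22.0], [43.0, 50.0]]
    print(flops / reads)  # 1.0
```

At one FLOP per value fetched, the processor can spend most of its time waiting on memory rather than computing. Caches and blocked (tiled) algorithms raise that ratio considerably in practice. The toy count only shows why memory traffic, not arithmetic, can become the limiting factor.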

It is one of the ironies of history that the solution to this problem came not from academia but from the gaming industry. GPUs, which were developed in the 1970s to accelerate video games, parallelize data-intensive operations across thousands of cores. This parallelism is an efficient solution to the von Neumann bottleneck. GPUs made it possible to train deeper neural networks and have become the status quo for modern AI hardware.
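Matrix multiplication parallelizes well because each row of the output depends only on one row of the first matrix and on the second matrix, never on other output rows. The sketch below splits the output rows into chunks and hands them to a thread pool. The helper names `matmul_rows` and `parallel_matmul` are hypothetical. Python threads are used only to show the decomposition: because of the global interpreter lock this is not faster than a serial loop, whereas a GPU spreads comparable independent work across its hardware cores.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_rows(a, b, rows):
    """Compute the given rows of a @ b; each row depends only on its own inputs."""
    n, k = len(b[0]), len(b)
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in rows]


def parallel_matmul(a, b, workers=4):
    """Split the output rows into chunks and compute each chunk independently."""
    m = len(a)
    chunk = max(1, -(-m // workers))  # ceiling division
    chunks = [range(s, min(s + chunk, m)) for s in range(0, m, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each chunk is an independent task; results come back in chunk order.
        parts = list(pool.map(lambda rows: matmul_rows(a, b, rows), chunks))
    return [row for part in parts for row in part]


if __name__ == "__main__":
    a = [[1, 2], [3, 4], [5, 6]]
    b = [[1, 0], [0, 1]]
    print(parallel_matmul(a, b, workers=2))  # [[1, 2], [3, 4], [5, 6]]
```

No chunk ever waits on another, so adding more workers (or cores) shortens the total time only as long as memory bandwidth can keep them fed.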

There is, then, an element of sheer luck in AI research. Google researcher Sara Hooker fittingly calls this the 'hardware lottery': early AI researchers were simply unlucky, because they were stuck with slow CPUs. Researchers who happened to be working in the field when GPUs came along 'won' the hardware lottery. Fuelled by GPU acceleration, they could make rapid progress by training deep neural networks.

The problem with the hardware lottery is that once the field as a whole has settled on a winner, it becomes difficult to explore anything different. Hardware development is slow and requires chip manufacturers to make large upfront investments with uncertain returns. The safe bet is simply to keep optimizing for matrix multiplication, which has become the status quo. In the long run, however, this focus on one particular combination of hardware and algorithm may limit our options.

Let's circle back to the original question: why is present-day AI so expensive? Perhaps the answer is that we simply don't have the right hardware yet. And the existence of the hardware lottery, combined with market incentives, makes it economically very hard to break out of the current status quo.

As an example, consider Geoffrey Hinton's capsule neural networks, a novel approach to computer vision. Google researchers Paul Barham and Michael Isard found that this approach performs reasonably well on CPUs but poorly on GPUs and TPUs. The reason? Accelerators have been optimized for the most frequent operations, such as standard matrix multiplication, but lack optimizations for capsule convolutions. Their conclusion (which is also the title of their paper): machine learning systems are stuck in a rut.

AI researchers risk 'overfitting' to current hardware, which in the long run could hinder progress in the field.

"The next breakthrough may involve a radically different way of modeling the world with different hardware, software, and algorithm combinations." - Sara Hooker, Google Brain

In the human brain, memory and computation are not two separate components but live in the same place: the neurons. Memory emerges from the way synapses wire neurons together, while computation emerges from the way neurons fire and propagate sensory information. As in early-generation computers, hardware and algorithm are one and the same. This is nothing like the way we do AI today.
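As a loose illustration of memory and computation living in one place, here is a leaky integrate-and-fire neuron, a standard, heavily simplified textbook model; real neurons are far more complex. The class name `LIFNeuron` and the threshold, leak, and weight values are arbitrary assumptions chosen for the example. Each neuron keeps its own synaptic weights and updates its own state, so there is no separate memory unit to fetch weights from.

```python
class LIFNeuron:
    """A simplified leaky integrate-and-fire neuron (illustrative parameters).

    The synaptic weights (the neuron's 'memory') and the update rule (its
    'computation') live in the same object.
    """

    def __init__(self, weights, threshold=1.0, leak=0.9):
        self.weights = list(weights)  # one weight per input synapse
        self.threshold = threshold
        self.leak = leak              # fraction of potential kept each step
        self.potential = 0.0

    def step(self, spikes):
        """Integrate one time step of binary input spikes; return True if it fires."""
        self.potential = self.leak * self.potential + sum(
            w for w, s in zip(self.weights, spikes) if s)
        if self.potential >= self.threshold:
            self.potential = 0.0      # reset after firing
            return True
        return False


if __name__ == "__main__":
    neuron = LIFNeuron(weights=[0.6, 0.3])
    print([neuron.step(s) for s in [(1, 0), (1, 0), (0, 0)]])  # [False, True, False]
```

A single input spike is not enough to reach the threshold, but two in quick succession are, because part of the first one is still 'remembered' in the membrane potential when the second arrives.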

Even though they perform extremely well on many tasks today, deep neural networks running on GPUs and TPUs may not be the way forward in the long run. In the vast landscape of possible combinations of hardware and algorithm architectures, they may be just a local optimum.

The path forward begins with the realization that algorithms alone are not enough. The next generation of AI will require advances in both hardware and algorithms. Before GPUs, AI research was stuck. Without a new hardware breakthrough, we are likely to get stuck again.

That's all for today. Let me know what you think about this emerging crisis of high-performance hardware and its influence on AI. Next time, I'll come up with another topic. Until then, stay safe!! PEACE!!
